| People Detection and Tracking using Stereo Vision and Color (pdf) |

Motivation:

People detection and tracking is an important capability for applications that aim to achieve natural human-machine interaction. Although the topic has been extensively explored using a single camera, the availability and low price of new commercial stereo cameras make them an attractive sensor for developing more sophisticated applications that take advantage of depth information. This work presents a system able to visually detect and track multiple people using a stereo camera placed at under-head positions. That camera position is especially appropriate for human-machine applications that require interacting with people or analyzing human facial gestures. In an initial phase, the environment is modelled using a height map, which is later used to extract the foreground objects easily and to search for people among them using a face detector. Once a person has been spotted, the system tracks him/her while it keeps looking for new people. Tracking is performed using the Kalman filter to estimate the position and trajectory of each person in the environment. Nonetheless, when two or more people come close to each other, information about the color of their clothes is also employed to track them more efficiently. The system has been extensively tested on stereo video sequences.

Tests performed:

The system has been tested using both 4mm and 6mm stereo cameras. Because a shorter focal length provides a wider field of view, there are more people in the tests performed using the 4mm camera than in those performed using the 6mm camera. A selection of the most interesting tests is presented below. Nevertheless, the videos of all the tests performed can be downloaded at http://decsai.ugr.es/~salinas/peopledetectionandtracking/. For some of the tests there are two versions. The first one shows the performance of the system when the tracking is based only on the position of each person, i.e., color information about the clothes of each person is not used. Without color information, the tracking process becomes unreliable when people are close to each other, and it tends to interchange their identities. This can be noticed because the ID number assigned to each person when he/she is introduced to the system is incorrectly swapped with the ID of another person in some situations in which they interact very closely. The second version of the video shows the results of analyzing the same sequence using both information about the position of the people (as in the previous version) and information about the color of their clothes. Normally, color information helps to avoid the confusion caused by close interactions.
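The identity swap described above can be illustrated with a small sketch. The page does not say how position and color are combined, so everything below is an assumption made for illustration: a Gaussian score on ground-plane distance, a coarse RGB histogram of the clothes region compared by histogram intersection, and an exhaustive search over assignments. The names `color_histogram`, `match_score`, and `best_assignment` are hypothetical.

```python
import math
from itertools import permutations


def color_histogram(pixels, bins=4):
    """Normalized joint RGB histogram of (r, g, b) pixels with values in 0..255."""
    hist = [0.0] * (bins ** 3)
    step = 256 // bins
    for r, g, b in pixels:
        idx = (r // step) * bins * bins + (g // step) * bins + (b // step)
        hist[idx] += 1.0
    total = sum(hist) or 1.0
    return [h / total for h in hist]


def histogram_intersection(h1, h2):
    """Similarity in [0, 1] between two normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))


def match_score(pred_pos, obs_pos, track_hist, obs_hist, use_color, sigma=0.5):
    """Score a (track, observation) pair; sigma (metres) is an assumed value."""
    dx, dy = pred_pos[0] - obs_pos[0], pred_pos[1] - obs_pos[1]
    score = math.exp(-(dx * dx + dy * dy) / (2 * sigma * sigma))
    if use_color:
        score *= histogram_intersection(track_hist, obs_hist)
    return score


def best_assignment(tracks, observations, use_color):
    """Return {track_id: observation_index} maximizing the summed score.

    Exhaustive search; only sensible for a handful of people, and it
    assumes there are at least as many observations as tracks.
    """
    ids = sorted(tracks)
    best, best_total = None, -1.0
    for perm in permutations(range(len(observations)), len(ids)):
        total = sum(
            match_score(tracks[i][0], observations[j][0],
                        tracks[i][1], observations[j][1], use_color)
            for i, j in zip(ids, perm)
        )
        if total > best_total:
            best, best_total = dict(zip(ids, perm)), total
    return best


# Two people cross paths: each observation lies closer to the other
# person's predicted position than to its own.
red = color_histogram([(200, 30, 30)] * 50)
blue = color_histogram([(30, 30, 200)] * 50)
tracks = {1: ((1.0, 1.0), red), 2: ((1.2, 1.0), blue)}
observations = [((1.12, 1.0), red), ((1.08, 1.0), blue)]

position_only = best_assignment(tracks, observations, use_color=False)
with_color = best_assignment(tracks, observations, use_color=True)
```

In this toy scene the position-only assignment gives person 1 the blue observation, which is the swap, while the assignment that also uses color keeps each ID on the matching clothes.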

Videos:

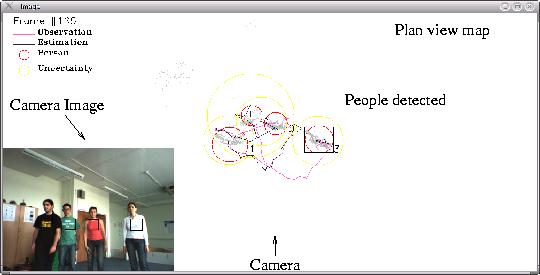

The videos show, at the bottom left, the images captured by the camera. The rest of the image represents a plan-view map of the environment, i.e., the occupancy map. The different objects detected can be seen in the occupancy map. Each detected object is represented by a black box. If the system detects that an object represents a person (using a face detector), it assigns an ID number (shown at the bottom right of its box) and the object is surrounded by two circles: a red circle indicating that the object is a person, and a yellow circle showing the uncertainty area in which the person is expected to be found in the next frame. Notice that when the object is temporarily occluded, the yellow circle grows. This is because during that period the person could be moving, so the uncertainty about his/her position is higher. Additionally, two lines are associated with each person: a magenta line that shows the last position in which the person was found (observation) and a black line that indicates the position estimated using the Kalman filter for that person (estimation). The difference between them is called the innovation and indicates how well the Kalman filter is working. Finally, the squares on the chest of each person that appear in the camera image are the regions selected for analyzing the color of their clothes.
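The relationship between observation, estimation, innovation, and the growing uncertainty area can be sketched with a minimal Kalman filter. This is not the filter used in the system, whose state model is not given on this page. It assumes a constant-velocity model on a single ground-plane axis, and the noise values and the names `AxisKalman` and `track` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AxisKalman:
    """Constant-velocity Kalman filter for one ground-plane axis (assumed model)."""
    pos: float            # estimated position
    vel: float = 0.0      # estimated velocity per frame
    p_pp: float = 1.0     # position variance
    p_pv: float = 0.0     # position/velocity covariance
    p_vv: float = 1.0     # velocity variance
    q_pos: float = 0.01   # process noise on position (illustrative value)
    q_vel: float = 0.1    # process noise on velocity (illustrative value)
    r: float = 0.05       # measurement noise variance (illustrative value)

    def predict(self, dt: float = 1.0) -> None:
        """Propagate state and covariance one frame; uncertainty always grows."""
        self.pos += self.vel * dt
        new_pp = self.p_pp + 2 * dt * self.p_pv + dt * dt * self.p_vv + self.q_pos
        new_pv = self.p_pv + dt * self.p_vv
        self.p_pp, self.p_pv = new_pp, new_pv
        self.p_vv += self.q_vel

    def update(self, z: float) -> float:
        """Correct the estimate with observation z; return the innovation."""
        innovation = z - self.pos          # observation minus prediction
        s = self.p_pp + self.r             # innovation variance
        k_pos, k_vel = self.p_pp / s, self.p_pv / s
        self.pos += k_pos * innovation
        self.vel += k_vel * innovation
        new_pp = (1 - k_pos) * self.p_pp
        new_pv = (1 - k_pos) * self.p_pv
        new_vv = self.p_vv - k_vel * self.p_pv
        self.p_pp, self.p_pv, self.p_vv = new_pp, new_pv, new_vv
        return innovation


def track(observations, dt=1.0):
    """Run the filter over a sequence; None marks an occluded frame.

    Returns one (estimate, innovation, position_variance) tuple per frame.
    """
    first = next(z for z in observations if z is not None)
    kf = AxisKalman(pos=first)
    history = []
    for z in observations:
        kf.predict(dt)
        innovation = kf.update(z) if z is not None else None
        history.append((kf.pos, innovation, kf.p_pp))
    return history


if __name__ == "__main__":
    # Three visible frames, three occluded frames, then the person reappears.
    for est, innov, var in track([0.0, 0.1, 0.2, None, None, None, 0.6]):
        print(f"estimate={est:.3f} innovation={innov} variance={var:.3f}")
```

During the occluded frames only `predict` runs, so the position variance increases frame after frame, which corresponds to the yellow circle growing. It shrinks again as soon as an observation is available.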

Test 1) In this test, 4 people introduce themselves to the system and start moving. The video has been recorded using a 4mm focal length stereo camera. test_4mm_4people_1.avi, test_4mm_4people_1_nocolor.avi

Test 2) In this test, 3 people introduce themselves to the system and start moving, crossing their paths. The video has been recorded using a 4mm focal length stereo camera. test_4mm_3people_4.avi, test_4mm_3people_4_nocolor.avi

Test 3) In this test, 3 people introduce themselves to the system and start moving, crossing their paths, jumping, and embracing each other. This is a very complicated scene, and it can be noticed that the system is able to track the 3 people without error. The video has been recorded using a 4mm focal length stereo camera. test_4mm_3people_5.avi

Test 4) In this test, 3 people introduce themselves to the system and start moving. They move fast and collide with each other. The video has been recorded using a 4mm focal length stereo camera. test_4mm_3people_6.avi, test_4mm_3people_6_nocolor.avi

| A fuzzy system for visual detection of interest in human-robot interaction (pdf) |

The development of natural human-robot interfaces is necessary to achieve intelligent robots applied to service tasks in environments where humans operate. In that sense, a very useful capability for a robot is to be able to detect the interest that the people in the environment have in interacting with it. This knowledge can be used to establish a more natural communication with humans as well as to create an appropriate policy for the assignment of the available resources. In this work, a fuzzy system is proposed that establishes a level of possibility about the degree of interest that the people around the robot have in interacting with it. First, a method to detect and track persons using stereo vision is proposed. The method uses a height map of the environment and a face detector, together with the Kalman filter, to detect and track the persons in the surroundings of the robot. Then, the interest of each person is computed using fuzzy logic by analyzing his/her position and level of attention to the robot. The level of attention is estimated by analyzing whether the person is looking at the robot or not. Although the proposed system is based only on visual information, its modularity and the use of fuzzy logic make it easy to incorporate other sources of information in the future to estimate the interest of the people with higher precision.

Videos:

The videos below show the use of our fuzzy system for detecting the interest of the people in interacting with the robot. In the videos, several people appear moving in the environment. Each person is identified by a unique number (at the left of the box that identifies the person), while the robot estimates their interest (at the right side of the box).

a) A person moving in front of the robot.
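As a rough illustration of how position and attention could be fused into a single interest value with fuzzy rules, consider the sketch below. The membership functions, the four rules, their output levels, and the weighted-average defuzzification are all assumptions chosen for illustration and are not taken from the paper. Position is reduced here to the distance to the robot, and `ramp_down` and `interest` are hypothetical names.

```python
def ramp_down(x, lo, hi):
    """Membership that is 1 below lo, 0 above hi, and linear in between."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def interest(distance_m, attention):
    """Estimate interest in [0, 1] from distance (metres) and attention in [0, 1].

    attention is assumed to be a degree to which the person looks at the robot.
    """
    near = ramp_down(distance_m, 1.0, 4.0)   # assumed breakpoints
    far = 1.0 - near
    high_att = min(max(attention, 0.0), 1.0)
    low_att = 1.0 - high_att

    # Each rule: (firing strength using min as AND, crisp output level).
    rules = [
        (min(near, high_att), 1.0),  # near and attentive     -> high interest
        (min(near, low_att), 0.4),   # near but not looking   -> some interest
        (min(far, high_att), 0.6),   # far but looking        -> moderate interest
        (min(far, low_att), 0.0),    # far and not looking    -> no interest
    ]
    total = sum(strength for strength, _ in rules)
    # total is never zero: near + far = 1 and high_att + low_att = 1.
    return sum(strength * level for strength, level in rules) / total


close_and_looking = interest(1.0, 1.0)
close_not_looking = interest(1.0, 0.0)
far_and_looking = interest(5.0, 1.0)
far_not_looking = interest(5.0, 0.0)
```

A rule table like this is easy to extend with further inputs, which is in line with the modularity argument made in the abstract.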

| Multi-agent system for human-robot interaction using stereo vision |

This work presents an expandable system that enables an intelligent interaction between mobile robots and humans. A multi-agent system architecture for controlling the robot movement was used to enable expandability. Human detection and tracking are achieved by means of real-time techniques based on stereo vision. The system proposed is structured in three layers. Agents in the lower layer provide an abstraction of the real hardware used, so the system can be easily adapted to different robot platforms. The middle layer implements a set of basic behaviors that make it possible to control the movement of the robot and to keep visual track of human users. In the upper layer, these basic behaviors are combined in a sequential or concurrent manner in order to implement more complex behaviors named skills. The skills designed allow the robot: (i) to detect an interested user who desires to interact with the robot; (ii) to keep track of the user while he/she moves in the environment; and (iii) to follow the user through the environment, avoiding possible obstacles in the way. The three skills constitute a minimum set of abilities useful for many mobile robotic applications that require interaction with human users. Besides, the multi-agent approach employed allows the system to be easily expanded with further functionality. The system has been evaluated in different real-life experiments with several users, achieving good results and real-time performance.

Videos:

The videos below show the proposed multi-agent architecture running in real time on a PeopleBot robot equipped with a laptop computer and a stereo vision system (Bumblebee camera) mounted on a robotic head. All the videos are available at http://decsai.ugr.es/~salinas/humanrobotvideos/. They are divided into three types depending on the skill that is tested in each one. As there are a lot of videos available, the most interesting ones have been selected here.

a) FacePerson Skill: this skill allows a mobile robot to face a person, i.e., to orient itself to keep continuous track of the person.

t3_v1_up.avi: This video shows how a person approaches the robot to start an interaction. Once the person is detected, the robot starts tracking him, but without performing any translation. The robot only turns on the spot. The video has been recorded from a camera on the ceiling.

t3_v2_robot.avi: As in the previous video, this one shows how a person is tracked by the robot. This time, the video shows what the robot sees (the robot's view).

t3_v3_human.avi: This video shows the same scene as the two previous ones, but this time seen from the person's view.

t4_v1.avi: This video shows the use of the FacePerson Skill, but this time adding a little difficulty. The person is surrounded by other people.

t4_v4.avi: This video shows the same scene as the previous video but with a different person.

b) FollowPerson Skill: this skill allows a mobile robot to move following a person.

t5_v9.avi: Another video showing the ability of our architecture to follow a person.

t5_v8.avi: This video shows the ability of our architecture to follow people. In the video, a person introduces himself to the robot and starts leading the robot around the laboratory. During the test, a second person is in the lab and moves freely, even crossing between the first person and the robot. Nevertheless, our system is able to keep continuous track of the first person and avoid confusion.

t5_v011.avi: In this video the robot follows a person and avoids obstacles in the way.

t5_inside_v0.avi: This video shows the robot's view while following a person. In the video the person moves in the lab and the robot keeps track of him even in the presence of other people. Please notice the difficult illumination conditions during the test. Despite them, the system is able to robustly keep track of the user.